Website Parsing

Website parsing is an innovative approach to conducting research on websites, including UX research: it identifies website elements and lets you build tasks around them! Learn more about this iFrame-based approach and its benefits.

Background

Let’s say you are conducting research and you need to track user behavior. Tracking what the user is doing, clicking on, and interacting with is important for many facets of user behavior research, including UX, ecommerce, and even policy making.

However, to capture and report this data, tracking code has to run on the client side, in the user's browser, where it measures behaviors and interactions. Getting it there isn't so simple.

Challenge

Injecting code into websites in order to measure user behavior is challenging for several reasons:

- It requires access to the website's server, and altering the site's code can compromise the website, potentially even reducing its traffic.

- Injecting code requires advanced IT personnel, which can increase costs for small businesses.

- Using plugins may be a solution, but it is also limiting because it requires installing additional software.

- Another option is to passively record a tab and treat it as an image for analysis. That is, the live website is shown in one tab while the recording takes place in another. However, this approach gives up on tracking user behavior entirely, because clicks made in a different browser tab cannot be captured.

Solution by PageGazer

PageGazer innovates in this area by cloning the live website in order to test user behavior. By cloning the website and showing it within PageGazer, researchers can identify page elements of interest and use them as the basis of their research project. Website parsing treats every element on the displayed page as a distinct element and lets you create research tasks around them.

Proxied iFrame Technology

To accomplish this, PageGazer relies on an augmented iFrame. A typical iFrame can load another website's code and display it, but it doesn't give you control over that website's elements; the augmented iFrame goes beyond this by letting you manipulate them.

Our server fetches the website by acting as a proxy. When a user clicks somewhere on the page, the resulting request is routed through our proxy server to the original website's server (such as eBay), and the response comes back through our server before the outcome is displayed within PageGazer. Because all traffic passes through our server, we can inject any code needed for website research, such as code that enables eye tracking or A/B testing. The proxied iFrame is also what allows the neural network behind PageGazer to function: it combines the parsed website data with physiological data (from eye tracking and mouse tracking) to generate insights. None of this would be possible without the proxied iFrame.
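
As a rough illustration of the proxying step, the Python sketch below rewrites a fetched page so that its links point back at a proxy endpoint, then injects a tracking script before `</body>`. This is a simplified, regex-based assumption, not PageGazer's implementation: the `/proxy?url=` endpoint, the `tracker.js` script, and the function names are hypothetical, fetching the page is left out, and a production proxy would use a real HTML parser and also handle forms, scripts, and cookies.

```python
import re
from urllib.parse import quote, urljoin

# Hypothetical endpoint on the research server that fetches and re-serves pages.
PROXY_PREFIX = "/proxy?url="
# Hypothetical tracking script (e.g. eye/mouse tracking, event handlers) to inject.
TRACKER_TAG = '<script src="/static/tracker.js"></script>'

# Simplified pattern for href="..." and src="..." attributes (single or double quotes).
_LINK_ATTR = re.compile(r"""\b(href|src)=(["'])(.*?)\2""", re.IGNORECASE)
# In-page anchors and non-HTTP schemes are left untouched.
_SKIP_PREFIXES = ("#", "javascript:", "mailto:", "data:")


def proxy_url(page_url: str, link: str) -> str:
    """Resolve a possibly relative link and wrap it so the request returns via the proxy."""
    absolute = urljoin(page_url, link)
    return PROXY_PREFIX + quote(absolute, safe="")


def rewrite_page(html: str, page_url: str) -> str:
    """Route links through the proxy and inject the tracking script before </body>."""

    def _rewrite(match: re.Match) -> str:
        attr, q, link = match.group(1), match.group(2), match.group(3)
        if link.lower().startswith(_SKIP_PREFIXES):
            return match.group(0)
        return f"{attr}={q}{proxy_url(page_url, link)}{q}"

    rewritten = _LINK_ATTR.sub(_rewrite, html)
    # Inject the tracker just before the closing body tag, or append it if there is none.
    close_body = rewritten.lower().rfind("</body>")
    if close_body == -1:
        return rewritten + TRACKER_TAG
    return rewritten[:close_body] + TRACKER_TAG + rewritten[close_body:]
```

Because every rewritten link points at the proxy, the next page the participant opens also passes through the research server and receives the same injected script.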

Features

Automatic AOIs Detection & Categorization

Areas of Interest (AOIs) are identified and categorized automatically by combining website parsing with neural networks that detect all page elements, such as buttons, text, images, and links.

Through fingerprinting, each website element is assigned a unique ID. This lets you manipulate that element for A/B testing by uploading image variations and assessing which design performs better against your goals or standards. It also lets you use these AOIs as the basis of your study by creating guided task scenarios in which the user's interactions are recorded per element. Finally, it makes data aggregation across participants easy, such as counting fixations or clicks on a particular AOI, which strengthens the validity of the findings.
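
A minimal sketch of fingerprinting and categorization, under stated assumptions: the article does not describe PageGazer's fingerprinting scheme, so this example hashes each element's DOM path plus its own `id` attribute, and a simple tag-to-category table stands in for the neural network. `Node`, `CATEGORY_BY_TAG`, `fingerprint`, and `build_aoi_index` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Node:
    """Minimal stand-in for a parsed DOM element."""
    tag: str
    attrs: dict = field(default_factory=dict)
    children: list = field(default_factory=list)


# Simple tag-based rules, standing in (as an assumption) for neural-network categorization.
CATEGORY_BY_TAG = {
    "button": "button", "a": "link", "img": "image",
    "p": "text", "h1": "text", "h2": "text", "span": "text",
}


def fingerprint(dom_path: str, node: Node) -> str:
    """Hash the element's DOM path plus its own id attribute (no screen coordinates, no src)."""
    key = f"{dom_path}#{node.attrs.get('id', '')}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:12]


def build_aoi_index(root: Node) -> dict:
    """Return {element_id: (category, node)} for every categorizable element in the tree."""
    index = {}

    def visit(node: Node, dom_path: str) -> None:
        category = CATEGORY_BY_TAG.get(node.tag)
        if category is not None:
            index[fingerprint(dom_path, node)] = (category, node)
        # Sibling indices make each child's path unique within the tree.
        for i, child in enumerate(node.children):
            visit(child, f"{dom_path}/{child.tag}[{i}]")

    visit(root, root.tag)
    return index
```

Leaving screen coordinates and the image `src` out of the hash is a deliberate choice in this sketch: the same element keeps its ID across window sizes and when an image variation is uploaded for A/B testing. The trade-off is that sibling indices shift if the page structure changes, so a real fingerprint would likely combine more signals.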

Data Aggregation & Metrics

Because each website element, or AOI, automatically receives its own ID, it can be treated as a distinct unit. This allows powerful metrics to be collected for each element and aggregated across participants.

For example, when an AOI is clicked after a study has been completed, metrics are listed for that particular AOI, including gaze and fixations from eye tracking. This is shown in the image below where the indicated image (with the red border) is selected and the metrics are shown in the upper left corner, listing important metrics like total fixations:

To enable data aggregation, code is running in the background to register a click by attaching an event handler (to measure actions like clicking and scrolling). Then, based on these events, the neural network allows for behavioral data aggregation across all devices for seamless data analysis.

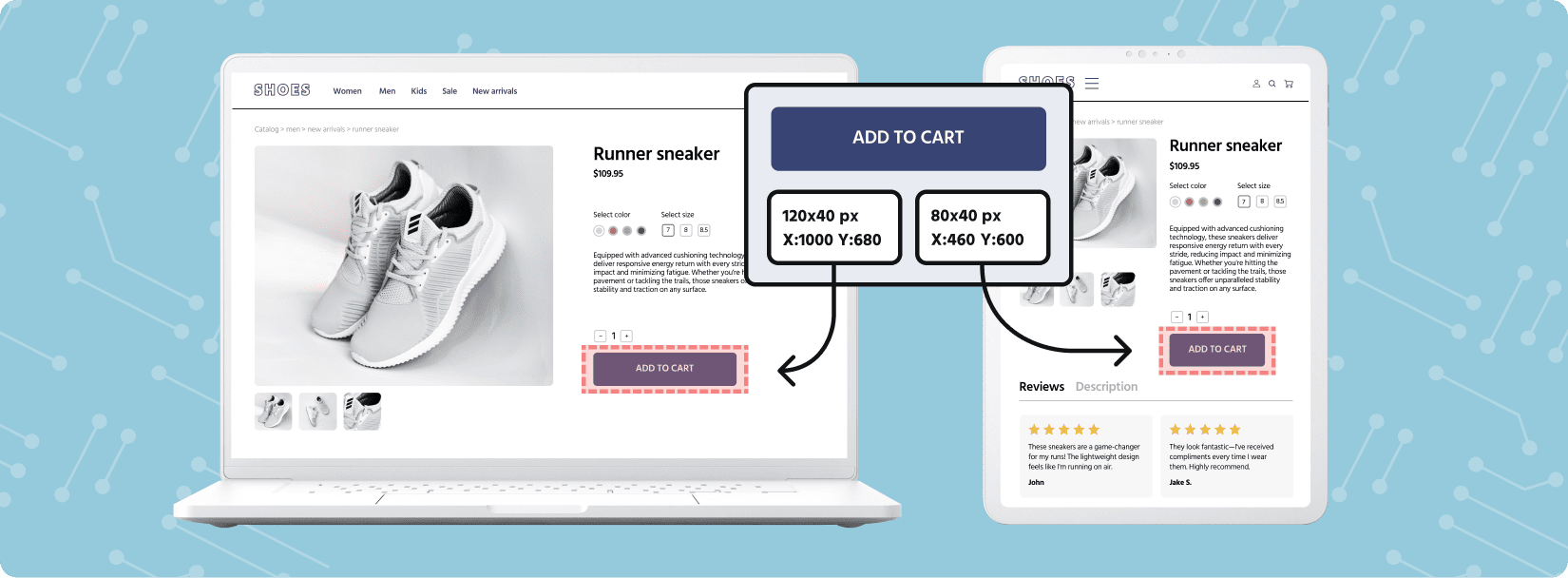
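
Once every event carries an element ID, per-AOI metrics reduce to grouping and counting. The sketch below assumes the injected script reports fixations and clicks as events tagged with the AOI's ID; the `Event` fields and `aoi_metrics` are hypothetical, and this plain tally does not reproduce the neural-network aggregation described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    participant: str
    element_id: str       # ID assigned to the AOI during parsing
    kind: str             # assumed event types: "fixation", "click", "scroll"
    duration_ms: int = 0  # fixation duration; 0 for other events


TRACKED_KINDS = ("fixation", "click")


def aoi_metrics(events: list) -> dict:
    """Aggregate raw events into per-AOI metrics across all participants."""
    totals = defaultdict(
        lambda: {"fixations": 0, "dwell_ms": 0, "clicks": 0, "participants": set()}
    )
    for e in events:
        if e.kind not in TRACKED_KINDS:
            continue  # page-level events such as scrolls are not attributed to one AOI here
        m = totals[e.element_id]
        if e.kind == "fixation":
            m["fixations"] += 1
            m["dwell_ms"] += e.duration_ms
        else:
            m["clicks"] += 1
        m["participants"].add(e.participant)
    # Replace participant sets with counts so the result is easy to report.
    return {
        aoi: {**m, "participants": len(m["participants"])}
        for aoi, m in totals.items()
    }
```

Keying everything on the element ID is what makes the tally meaningful: two participants who fixated the same image on differently sized screens still contribute to one row.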

Screen Reflow

As a result of this technology, website behavior can be studied regardless of screen or window size. Elements such as images or text keep the same unique ID wherever they appear on screen. This gives researchers the flexibility to test several design variations while still collecting data for each specific element.
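
To illustrate why stable IDs make reflow manageable, this sketch assumes the tracking script records each element's bounding box for the participant's current viewport, keyed by element ID, and maps a gaze or click point to the smallest box that contains it. `Box` and `element_at` are hypothetical; real hit-testing would also account for scrolling and stacking order.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Box:
    """Element bounding box in viewport pixels for one participant's layout."""
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


def element_at(layout: dict, px: float, py: float) -> Optional[str]:
    """Return the ID of the smallest element box containing the point, or None."""
    # Preferring the smallest box picks the innermost element when boxes are nested.
    hits = [
        (box.width * box.height, element_id)
        for element_id, box in layout.items()
        if box.contains(px, py)
    ]
    return min(hits)[1] if hits else None
```

The coordinates differ from one layout to the next, but the returned ID does not, so a fixation recorded on a phone and one recorded on a desktop monitor can be attributed to the same AOI.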

Conclusion

Being able to identify important website content, such as buttons and images, and treat each item as a unique element is crucial for effective website research. Website parsing is the innovative approach PageGazer takes to make this possible, enabling data aggregation and powerful task building.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 951847.
